PySpark absolute value


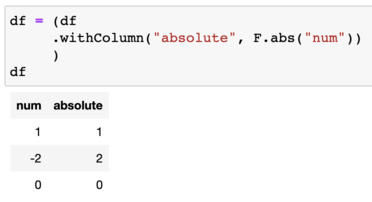

The abs function in PySpark computes the absolute value of a numeric column or expression: it returns the non-negative magnitude of the input, regardless of its original sign. It is commonly used in data analysis and manipulation tasks to normalize data, measure the size of a difference between two values, or filter rows by magnitude. The abs function can be applied to integer, floating-point, and decimal columns. It also accepts null values, which gives flexibility in data processing and analysis.


Performance considerations and best practices

To optimize the performance of your code when using abs, consider the following tip: choose the appropriate data type based on your specific use case.


Aggregate functions operate on a group of rows and calculate a single return value for every group. Each of these functions accepts its input as a Column or as a column name given as a string, plus other arguments that depend on the function, and returns a Column. If your application is performance-critical, avoid custom UDFs wherever possible, since they come with no performance guarantees. If you try grouping directly on the salary column you will get an error.

Naveen's journey in the field of data engineering has been one of continuous learning, innovation, and a strong commitment to data integrity. In this blog, he shares his experiences with data as he comes across them.


A collection of built-in functions available for DataFrame operations. From Apache Spark 3. Returns a Column based on the given column name. Creates a Column of literal value. Generates a random column with independent and identically distributed (i.i.d.) samples. Generates a column with independent and identically distributed (i.i.d.) samples.


Consider performance implications and optimize your code accordingly. When the abs function encounters a null value, it returns null as the result. Handle null values appropriately using the coalesce function. By following these guidelines and best practices, you can effectively use the abs function in PySpark and overcome any potential challenges that may arise.

PySpark SQL provides several built-in standard functions pyspark.

