Tacotron 2 online

This tutorial shows how to build a text-to-speech pipeline using the pretrained Tacotron2 in torchaudio. First, the input text is encoded into a list of symbols. In this tutorial, we will use English characters and phonemes as the symbols.
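The character-based encoding step can be sketched as follows. This is a minimal illustration, not the torchaudio text processor itself: the symbol set and the helper name `text_to_sequence` are assumptions chosen for the example, and phoneme-based encoding would need a separate grapheme-to-phoneme model.

```python
# Symbol table: padding, punctuation, space, and lowercase letters.
# This particular set is an assumption made for illustration.
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
look_up = {s: i for i, s in enumerate(symbols)}


def text_to_sequence(text):
    """Lowercase the text and map each known character to its index.

    Characters that are not in the symbol table are silently dropped.
    """
    return [look_up[ch] for ch in text.lower() if ch in look_up]


print(text_to_sequence("Hello world!"))
```

Each integer is simply the position of the character in the symbol table, so the same table must be used at training and inference time.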

A TensorFlow implementation of Tacotron is also available. It ships with suggested hparams; feel free to toy with the parameters as needed. The repository supports separate training, one step at a time. Step 1: Preprocess your data. Step 2: Train your Tacotron model.


Tacotron 2 - PyTorch implementation with faster-than-realtime inference. This implementation includes distributed and automatic mixed precision support and uses the LJSpeech dataset. Visit our website for audio samples using our published Tacotron 2 and WaveGlow models. Training using a pre-trained model can lead to faster convergence. By default, the dataset-dependent text embedding layers are ignored. When performing mel-spectrogram-to-audio synthesis, make sure Tacotron 2 and the mel decoder were trained on the same mel-spectrogram representation. This implementation uses code from the following repos: Keith Ito, Prem Seetharaman, as described in our code.
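One way to guard against mismatched mel-spectrogram representations is to compare the feature settings of the two models before synthesis. The sketch below is hypothetical: the field names in `MEL_KEYS` and the example values are assumptions for illustration, and real checkpoints may store these settings under different names.

```python
# Hypothetical field names; real checkpoints may name these differently.
MEL_KEYS = (
    "sampling_rate",
    "n_mel_channels",
    "filter_length",
    "hop_length",
    "win_length",
    "mel_fmin",
    "mel_fmax",
)


def mel_config_mismatches(acoustic_cfg, vocoder_cfg, keys=MEL_KEYS):
    """Return {key: (acoustic_value, vocoder_value)} for every setting that differs."""
    return {
        k: (acoustic_cfg.get(k), vocoder_cfg.get(k))
        for k in keys
        if acoustic_cfg.get(k) != vocoder_cfg.get(k)
    }


# Illustrative values only.
tacotron_cfg = {"sampling_rate": 22050, "n_mel_channels": 80, "hop_length": 256}
vocoder_cfg = {"sampling_rate": 22050, "n_mel_channels": 80, "hop_length": 200}

problems = mel_config_mismatches(tacotron_cfg, vocoder_cfg)
if problems:
    print("Mel settings differ:", problems)
```

An empty result means the compared settings agree; any entry in the result is a reason to expect degraded audio.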

Yield the logs-Wavenet folder. For technical details, please refer to the paper.

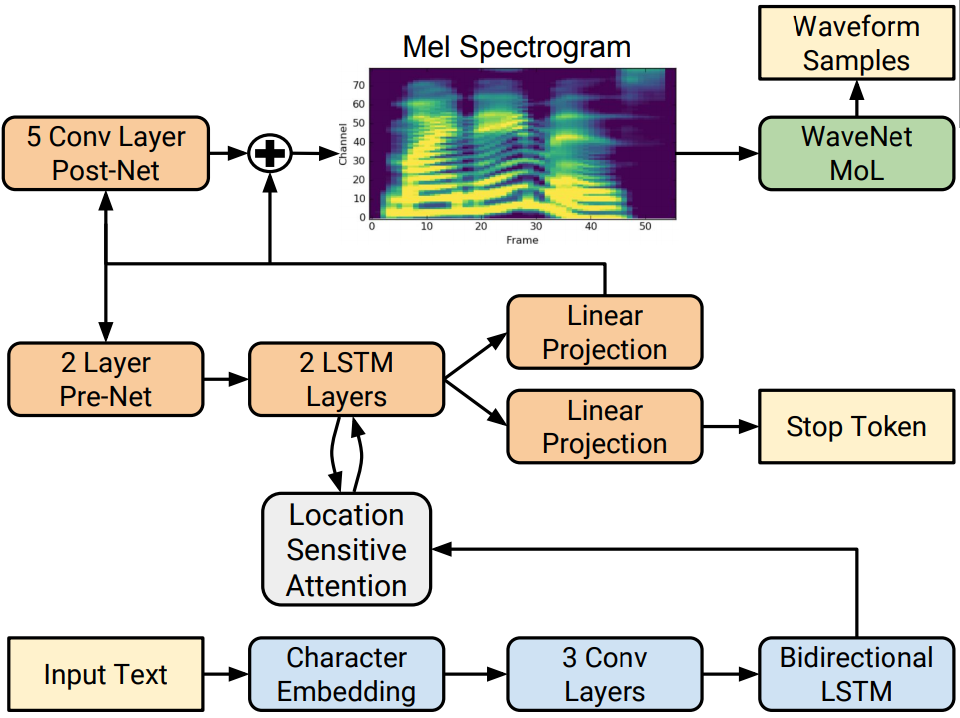

Saurous, Yannis Agiomyrgiannakis, Yonghui Wu. Abstract: This paper describes Tacotron 2, a neural network architecture for speech synthesis directly from text. The system is composed of a recurrent sequence-to-sequence feature prediction network that maps character embeddings to mel-scale spectrograms, followed by a modified WaveNet model acting as a vocoder to synthesize time-domain waveforms from those spectrograms. Our model achieves a mean opinion score (MOS) of 4. To validate our design choices, we present ablation studies of key components of our system and evaluate the impact of using mel spectrograms as the input to WaveNet instead of linguistic, duration, and F0 features. We further demonstrate that using a compact acoustic intermediate representation enables significant simplification of the WaveNet architecture. All of the below phrases are unseen by Tacotron 2 during training.
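To give a feel for what "mel-scale" means, the sketch below converts between hertz and mels and lists band edges that are evenly spaced in mels. It assumes the common HTK-style formula, which the abstract does not specify; the function names and the example frequency range are illustrative only.

```python
import math


def hz_to_mel(freq_hz):
    """Convert hertz to mels using the HTK-style formula (an assumption here)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(fmin_hz, fmax_hz, n_bands):
    """Return n_bands + 1 edge frequencies in Hz, evenly spaced on the mel scale."""
    lo, hi = hz_to_mel(fmin_hz), hz_to_mel(fmax_hz)
    step = (hi - lo) / n_bands
    return [mel_to_hz(lo + i * step) for i in range(n_bands + 1)]


edges = mel_band_edges(0.0, 8000.0, 8)
print([round(e) for e in edges])
```

Bands that are equally wide in mels get progressively wider in hertz, so a mel spectrogram keeps more detail at low frequencies than at high ones.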



The Tacotron 2 and WaveGlow models form a text-to-speech system that enables users to synthesise natural-sounding speech from raw transcripts without any additional prosody information. The Tacotron 2 model produces mel spectrograms from input text using an encoder-decoder architecture. WaveGlow is also available via torch. This implementation of the Tacotron 2 model differs from the model described in the paper. To run the example, you need some extra Python packages installed. Load the Tacotron2 model pre-trained on the LJ Speech dataset and prepare it for inference.
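The two-stage flow described above (text to mel spectrogram, then mel spectrogram to audio) might look like the sketch below. It rests on several assumptions not stated in this text: that the models are loaded through `torch.hub.load` from the `NVIDIA/DeepLearningExamples:torchhub` repository with the entry points `nvidia_tacotron2`, `nvidia_waveglow`, and `nvidia_tts_utils`, that a CUDA GPU and network access are available, and that the extra packages these models require are installed. The wrapper name `synthesize` is hypothetical.

```python
def synthesize(text):
    """Text -> mel spectrogram -> waveform.

    Assumes a CUDA GPU, network access, and the hub entry points named below.
    """
    import torch  # imported lazily so the sketch can be read without torch installed

    repo = "NVIDIA/DeepLearningExamples:torchhub"  # assumed hub repository

    # Stage 1 model: text to mel spectrogram.
    tacotron2 = torch.hub.load(repo, "nvidia_tacotron2", model_math="fp16")
    tacotron2 = tacotron2.to("cuda").eval()

    # Stage 2 model: mel spectrogram to audio.
    waveglow = torch.hub.load(repo, "nvidia_waveglow", model_math="fp16")
    waveglow = waveglow.remove_weightnorm(waveglow)
    waveglow = waveglow.to("cuda").eval()

    # Helper utilities that turn raw text into padded symbol sequences.
    utils = torch.hub.load(repo, "nvidia_tts_utils")
    sequences, lengths = utils.prepare_input_sequence([text])

    with torch.no_grad():
        mel, _, _ = tacotron2.infer(sequences, lengths)
        audio = waveglow.infer(mel)

    return audio[0].data.cpu().numpy()
```

Calling `synthesize("Hello world")` would download the checkpoints on first use and return the waveform as a NumPy array, provided the assumptions above hold.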


Firstly, we define the set of symbols. From the encoded text, a spectrogram is generated. The process of generating speech from a spectrogram is called vocoding, and the model that performs it is called a vocoder. Finally, you can install the requirements.


While our samples sound great, there are still some difficult problems to be tackled. Each of these is an interesting research problem on its own. After downloading the dataset, extract the compressed file and place the folder inside the cloned repository. You can, however, check some preliminary insights into the model's performance at early stages of training. "The shells she sells are sea-shells I'm sure." One can instantiate the model using torch.
