Spark read csv

Spark SQL provides spark.read().csv("file_name") to read a file or directory of files in CSV format into a Spark DataFrame, and dataframe.write().csv("path") to write to a CSV file. The option() function can be used to customize the behavior of reading or writing, such as controlling the header, delimiter character, character set, and so on. Other generic options can be found in Generic File Source Options.

This function goes through the input once to determine the input schema if inferSchema is enabled. To avoid that extra pass over the data, disable the inferSchema option or specify the schema explicitly using schema. For additional options, refer to Data Source Option for the version you use.
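The trade-off between inferSchema and an explicit schema can be sketched as follows. This is a minimal illustration, not a definitive implementation: the function names, the column layout in ZIPCODE_SCHEMA, and the `spark` argument (assumed to be an existing SparkSession) are assumptions made for the example.

```python
# Hypothetical column layout; replace it with the columns of your own file.
ZIPCODE_SCHEMA = "zipcode INT, city STRING, state STRING"


def read_with_inferred_schema(spark, path):
    """Let Spark infer column types, at the cost of an extra pass over the input."""
    return (
        spark.read
        .option("header", True)
        .option("inferSchema", True)
        .csv(path)
    )


def read_with_explicit_schema(spark, path, schema=ZIPCODE_SCHEMA):
    """Supply the schema up front (here as a DDL string) so no inference is needed."""
    return (
        spark.read
        .option("header", True)
        .schema(schema)
        .csv(path)
    )
```

Both helpers return a DataFrame; the second one is usually the better fit for large inputs because the types are known before any data is read.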

DataFrames are distributed collections of data organized into named columns. In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a Spark DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file using Scala. Spark reads CSV files in parallel, leveraging its distributed computing capabilities, which enables efficient processing of large datasets across a cluster of machines. Using spark.read.csv("path") or spark.read.format("csv").load("path"), you can read a CSV file into a DataFrame; these methods take a file path as an argument. Here, spark is a SparkSession object. By default, the data type of every column is String. When you use the format("csv") method, you can also specify data sources by their fully qualified name. Note that this still reads all columns as strings (StringType) by default.

If enforceSchema is set to true, the specified or inferred schema is forcibly applied to data source files, and headers in CSV files are ignored.

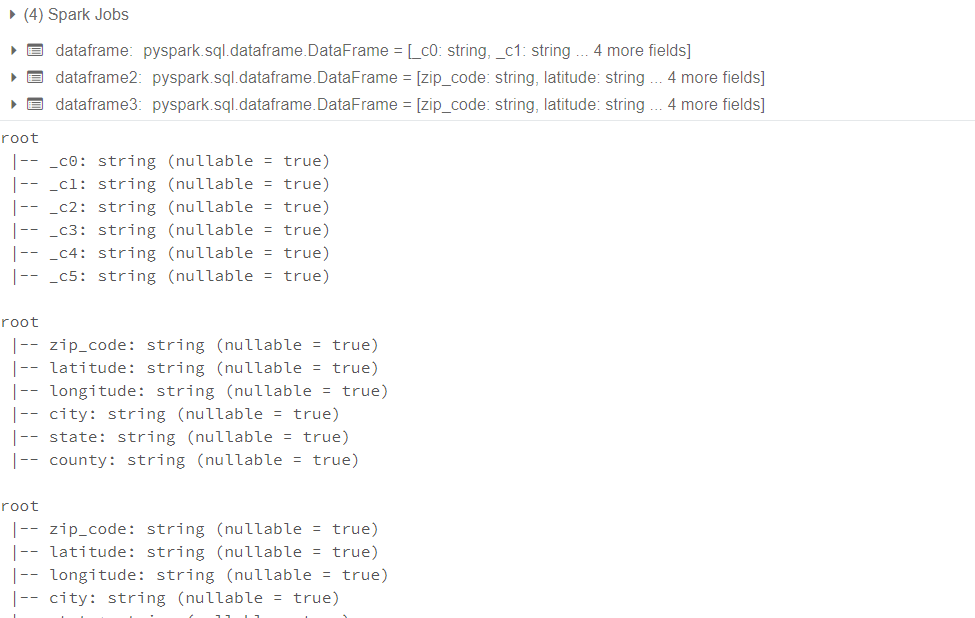

In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file using PySpark. You can read with csv("path") or format("csv").load("path"). When you use the format("csv") method, you can also specify data sources by their fully qualified name, but for built-in sources you can simply use their short names (csv, json, parquet, jdbc, text, etc.). Refer to the zipcodes dataset. If your input file has a header with column names, you need to explicitly set the header option to True using option("header", True); otherwise, the API treats the header as a data record.
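As a rough sketch of the two read styles described here, the helpers below accept a single file, a list of files, or a directory. The helper names and the example paths in the comments are hypothetical, and `spark` is assumed to be an existing SparkSession.

```python
def read_csv(spark, source, header=True):
    """Read CSV data with the csv(...) shortcut.

    `source` may be one path (a file or a directory) or a list of paths.
    """
    return spark.read.option("header", header).csv(source)


def read_csv_with_format(spark, source, header=True):
    """Same read, spelled with the generic format("csv").load(...) API."""
    return spark.read.format("csv").option("header", header).load(source)


# Usage, assuming a SparkSession named `spark` and hypothetical paths:
#   one_file   = read_csv(spark, "data/file1.csv")
#   many_files = read_csv(spark, ["data/file1.csv", "data/file2.csv"])
#   whole_dir  = read_csv_with_format(spark, "data/")
```

Leaving header at its default of False would make Spark treat the first line as a data record, which is why both helpers default it to True.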

For reading, the encoding option decodes the CSV files by the given encoding type. For writing, it specifies the encoding (charset) of saved CSV files. CSV built-in functions ignore this option. The quote option sets a single character used for escaping quoted values where the separator can be part of the value. For reading, if you would like to turn off quotations, you need to set it to an empty string rather than null.
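The encoding and quote options might be applied along these lines. The function name and the default values are illustrative assumptions, and `spark` is assumed to be an existing SparkSession.

```python
def read_csv_with_encoding(spark, path, encoding="ISO-8859-1", quote='"'):
    """Read a CSV file with a chosen character set and quote character.

    Pass quote="" (an empty string, not None) to turn quotation handling off.
    """
    return (
        spark.read
        .option("header", True)
        .option("encoding", encoding)  # charset used to decode the file
        .option("quote", quote)        # character that wraps quoted values
        .csv(path)
    )
```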

Spark provides several read options that help you read files. In this article, we discuss the different Spark read options and their configuration, with examples.

When records cannot be parsed as expected, the consequences depend on the mode that the parser runs in. While writing a CSV file, you can use several options. Use options to change the default behavior and write the DataFrame back to CSV files using different save options. After reading the CSV file, incorporate the data cleansing and transformation steps needed to handle missing values, outliers, or any other data quality issues specific to your use case.
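A minimal sketch of the cleanse-then-write step follows. It assumes that dropping rows with missing values is an acceptable cleansing rule for your data, which will not always be the case; the function name, the pipe separator, and the default save mode are choices made for the example, and `df` is assumed to be an existing DataFrame.

```python
def clean_and_write_csv(df, output_path, mode="overwrite"):
    """Drop rows that contain nulls, then write the result as CSV files."""
    cleaned = df.dropna()  # removes every row that has at least one null value
    (
        cleaned.write
        .mode(mode)              # save mode, e.g. "overwrite", "append", "ignore", "error"
        .option("header", True)  # write column names as the first line
        .option("sep", "|")      # hypothetical choice of field separator
        .csv(output_path)
    )
    return cleaned
```

Returning the cleaned DataFrame lets the caller keep transforming it after the write.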

The comment option sets a single character used for skipping lines beginning with this character. The charToEscapeQuoteEscaping option sets a single character used for escaping the escape for the quote character. Using the nullValue option, you can specify the string in a CSV to consider as null.
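Several options can also be passed at once with options(...). The sketch below is illustrative only: the "NA" null marker, the "#" comment character, and the helper name are assumptions, and `spark` is assumed to be an existing SparkSession.

```python
# Hypothetical defaults for a file that marks missing values as "NA"
# and uses "#" to start comment lines.
DEFAULT_CSV_OPTIONS = {
    "header": "true",
    "nullValue": "NA",  # this string is read as null
    "comment": "#",     # lines starting with this character are skipped
}


def read_with_options(spark, path, overrides=None):
    """Read a CSV file using the defaults above, optionally overridden per call."""
    merged = {**DEFAULT_CSV_OPTIONS, **(overrides or {})}
    return spark.read.options(**merged).csv(path)
```

Keeping the options in one dictionary makes it easier to reuse the same settings across several reads.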
