Spark dataframe

Spark has an easy-to-use API for handling structured and unstructured data called DataFrame. Every DataFrame has a blueprint called a schema. A schema can contain universal data types, such as string and integer types, as well as data types that are specific to Spark, such as the struct type.

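Spark SQL is a Spark module for structured data processing. Unlike the basic Spark RDD API, the interfaces provided by Spark SQL give Spark more information about the structure of both the data and the computation being performed. Internally, Spark SQL uses this extra information to perform extra optimizations. There are several ways to interact with Spark SQL, including SQL and the Dataset API, and the same execution engine is used regardless of which API or language expresses the computation. This unification means that developers can easily switch back and forth between different APIs based on which provides the most natural way to express a given transformation. All of the examples on this page use sample data included in the Spark distribution and can be run in the spark-shell, pyspark shell, or sparkR shell. Spark SQL can also be used to read data from an existing Hive installation.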

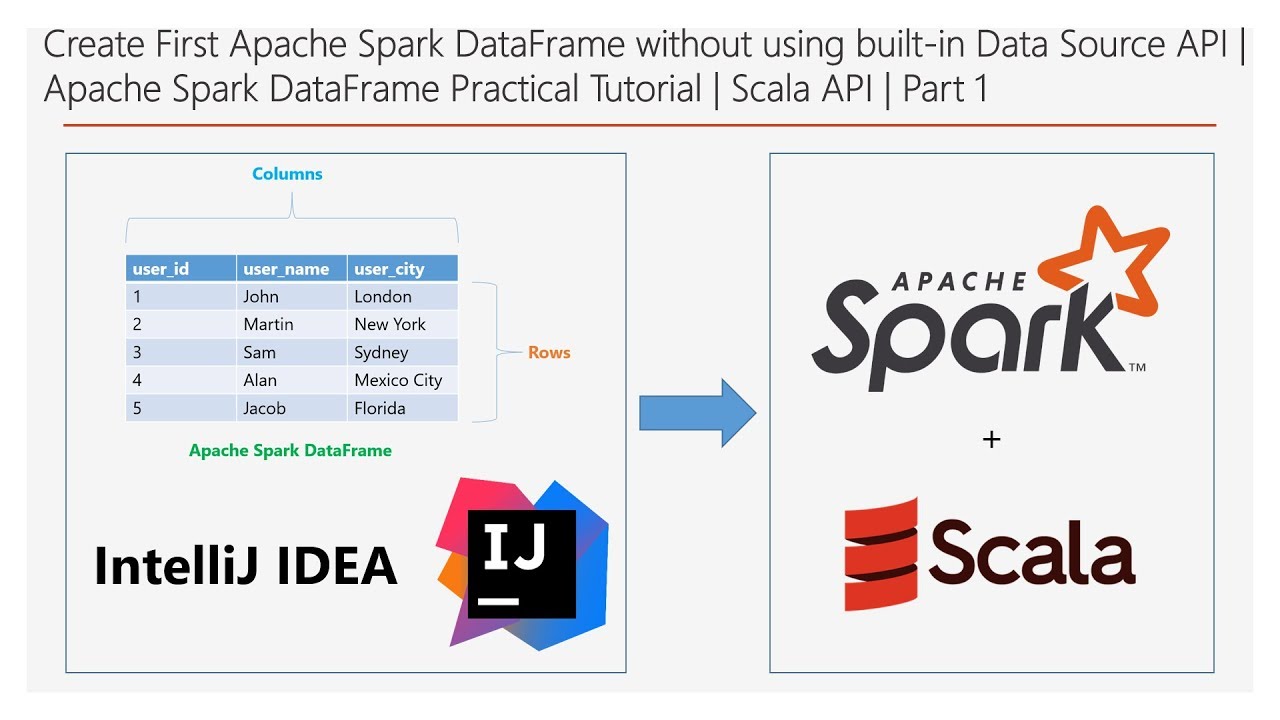

This tutorial shows you how to load and transform data. By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks: creating a DataFrame with Python, and viewing and interacting with a DataFrame.

A DataFrame is a two-dimensional labeled data structure with columns of potentially different types. Apache Spark DataFrames provide a rich set of functions (select columns, filter, join, aggregate) that allow you to solve common data analysis problems efficiently.

To follow along, you need permission to create compute enabled with Unity Catalog. If you do not have cluster control privileges, you can still complete most of the following steps as long as you have access to a cluster.

From the sidebar on the homepage, you access Databricks entities: the workspace browser, catalog, explorer, workflows, and compute. Workspace is the root folder that stores your Databricks assets, like notebooks and libraries.

If you install PySpark using pip, then PyArrow can be brought in as an extra dependency of the SQL module with the command pip install pyspark[sql].

Note that Spark does not guarantee that a broadcast hash join (BHJ) is always chosen.

The DataFrame API includes, among others, the following attributes and methods (names as in the PySpark API):

- `__getattr__`: returns the Column denoted by name.
- `__getitem__`: returns the column as a Column.
- `agg`: aggregates on the entire DataFrame without groups.
- `alias`: returns a new DataFrame with an alias set.
- `approxQuantile`: calculates the approximate quantiles of numerical columns of a DataFrame.
- `checkpoint`: returns a checkpointed version of this DataFrame.
- `coalesce`: returns a new DataFrame that has exactly numPartitions partitions.
- `colRegex`: selects a column based on the column name specified as a regex and returns it as a Column.
- `collect`: returns all the records as a list of Row.
- `columns`: retrieves the names of all columns in the DataFrame as a list.

DataFrames can be constructed from a wide array of sources, such as structured data files, tables in Hive, external databases, or existing RDDs. Each column in a DataFrame has a name and an associated type. The second method for creating a DataFrame is through a programmatic interface that allows you to construct a schema and then apply it to an existing RDD.

Note that the Hive storage handler is not yet supported when creating a table. You can create a table using a storage handler on the Hive side and use Spark SQL to read it. For more on how to configure Hive support, please refer to the Hive Tables section.

The data types of partitioning columns are inferred automatically. Currently, numeric data types, date, timestamp and string type are supported. Note that partition information is not gathered by default when creating external datasource tables (those with a path option).

Internally, Spark will execute a Pandas UDF by splitting columns into batches and calling the function for each batch as a subset of the data, then concatenating the results together.

The built-in DataFrame functions provide common aggregations such as count, countDistinct, avg, max, min, etc.

In this article, I will talk about installing Spark, the standard Spark functionality you will need to work with DataFrames, and finally, some tips for handling the inevitable errors you will face. This article is going to be quite long, so go on and pick up a coffee first.

Spark SQL can also be used to read data from an existing Hive installation. Please refer to the documentation in java. These jars only need to be present on the driver, but if you are running in yarn cluster mode then you must ensure they are packaged with your application.

Spark internally stores timestamps as UTC values, and timestamp data that is brought in without a specified time zone is converted as local time to UTC with microsecond resolution.

Apache Arrow is an in-memory columnar data format that is used in Spark to efficiently transfer data between JVM and Python processes.

This behavior is controlled by the spark. If compatibility with mixed-case column names is not a concern, you can safely set spark. For the Hive ORC serde tables e.

Users can specify the JDBC connection properties in the data source options.

A Dataset can be constructed from JVM objects and then manipulated using functional transformations (map, flatMap, filter, etc.). The inferred schema does not have the partitioned columns.
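
The timestamp rule described above (a value without a time zone is treated as local time, converted to UTC, and kept at microsecond resolution) can be illustrated with plain Python. This is not Spark's code, only a standard-library sketch of the same idea; the function name and the UTC+2 offset standing in for the local time zone are assumptions for the example.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def naive_local_to_utc_micros(naive, local_tz):
    """Interpret a zone-less timestamp as local time and return
    microseconds since the Unix epoch in UTC (hypothetical helper)."""
    aware_local = naive.replace(tzinfo=local_tz)      # attach the local zone
    as_utc = aware_local.astimezone(timezone.utc)     # convert to UTC
    return (as_utc - EPOCH) // timedelta(microseconds=1)


# Assumption: the local time zone is a fixed UTC+2 offset.
local_tz = timezone(timedelta(hours=2))

# A timestamp with no time zone and one microsecond of fractional time.
naive = datetime(2024, 1, 1, 12, 0, 0, 1)

micros = naive_local_to_utc_micros(naive, local_tz)
print(micros)
```

The practical consequence is that the same zone-less input can map to different stored instants depending on the local time zone in effect when the data is read.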
