SageMaker PyTorch

With multi-model endpoints (MMEs), you can host multiple models on a single serving container and serve all of them behind a single endpoint.

Hugging Face Transformers also provides Trainer and pretrained model classes for PyTorch that reduce the effort of configuring natural language processing (NLP) models. A dynamic input shape can trigger recompilation of the model and might increase total training time. For more information about the padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class. You don't need to change your code when you use the transformers.Trainer class.
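To make the point about dynamic input shapes concrete, here is a minimal pure-Python sketch. It is not the Transformers tokenizer; `pad_to_fixed_length`, `batch_shapes`, `max_length`, and `pad_id` are hypothetical names used only for illustration. It shows that padding each batch to its own longest sequence produces a different shape per batch, while padding to one fixed length keeps the shape constant, which is the situation that avoids repeated recompilation.

```python
def pad_to_fixed_length(token_ids, max_length, pad_id=0):
    """Truncate or right-pad one sequence so its length is exactly max_length."""
    clipped = token_ids[:max_length]
    return clipped + [pad_id] * (max_length - len(clipped))


def batch_shapes(batch, max_length=None):
    """Return the (batch_size, sequence_length) shape a padded batch would have."""
    if max_length is None:
        # Dynamic padding: pad only to the longest sequence in this batch.
        max_length = max(len(seq) for seq in batch)
    padded = [pad_to_fixed_length(seq, max_length) for seq in batch]
    return (len(padded), len(padded[0]))


# Two toy batches of token ids with different sequence lengths.
batches = [[[5, 6, 7], [8]], [[1, 2, 3, 4, 5], [9, 9]]]

# Dynamic padding yields one distinct shape per batch.
dynamic = {batch_shapes(b) for b in batches}

# Fixed-length padding yields a single shape for every batch.
fixed = {batch_shapes(b, max_length=8) for b in batches}
```

With a real tokenizer, the equivalent choice is made through its padding options rather than a hand-written helper like this one.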



Now that we have all three models hosted on an MME, we can invoke each model in sequence to build our language-assisted editing features.
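Invoking models in sequence on one endpoint can be sketched as follows. This is an illustration under assumptions, not the post's own code: the endpoint name, the model archive names, and the JSON payload shape are hypothetical, and `EchoClient` is a stand-in so the example runs without AWS access. The keyword arguments mirror those of the SageMaker runtime `invoke_endpoint` call, where `TargetModel` selects which model on the MME handles the request.

```python
import io
import json


def invoke_model(client, endpoint_name, target_model, payload):
    """Send one JSON request to a specific model hosted on the MME."""
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        TargetModel=target_model,  # which model archive on the MME serves this call
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    return json.loads(response["Body"].read())


def run_pipeline(client, endpoint_name, steps, payload):
    """Call each model in order, feeding every response into the next request."""
    for target_model in steps:
        payload = invoke_model(client, endpoint_name, target_model, payload)
    return payload


class EchoClient:
    """Stand-in for the runtime client so the sketch runs without AWS access."""

    def __init__(self):
        self.calls = []

    def invoke_endpoint(self, EndpointName, TargetModel, ContentType, Body):
        # Record the call and echo the payload back with the model name appended.
        self.calls.append(TargetModel)
        data = json.loads(Body)
        data.setdefault("visited", []).append(TargetModel)
        return {"Body": io.BytesIO(json.dumps(data).encode("utf-8"))}


client = EchoClient()
result = run_pipeline(
    client,
    "my-mme-endpoint",  # hypothetical endpoint name
    ["model-a.tar.gz", "model-b.tar.gz", "model-c.tar.gz"],  # hypothetical archives
    {"prompt": "example"},
)
```

Against a real endpoint, the stand-in would be replaced by a client created with `boto3.client("sagemaker-runtime")`, and each step would likely need its own request format rather than a shared payload.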


A generative adversarial network (GAN) is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas and synthetic data, and more. For example, the following images show the effect of picture-to-picture conversion. The following images show the effect of synthesizing scenery based on semantic layout. We also introduce a use case of one of the hottest GAN applications in the synthetic data generation area. We hope this gives you a tangible sense of how GANs are used in real-life scenarios. Of the following two pictures of handwritten digits, one is actually generated by a GAN model. Can you tell which one? This article focuses on using ML techniques to generate synthetic handwritten digits. To achieve this goal, you train a GAN model yourself.


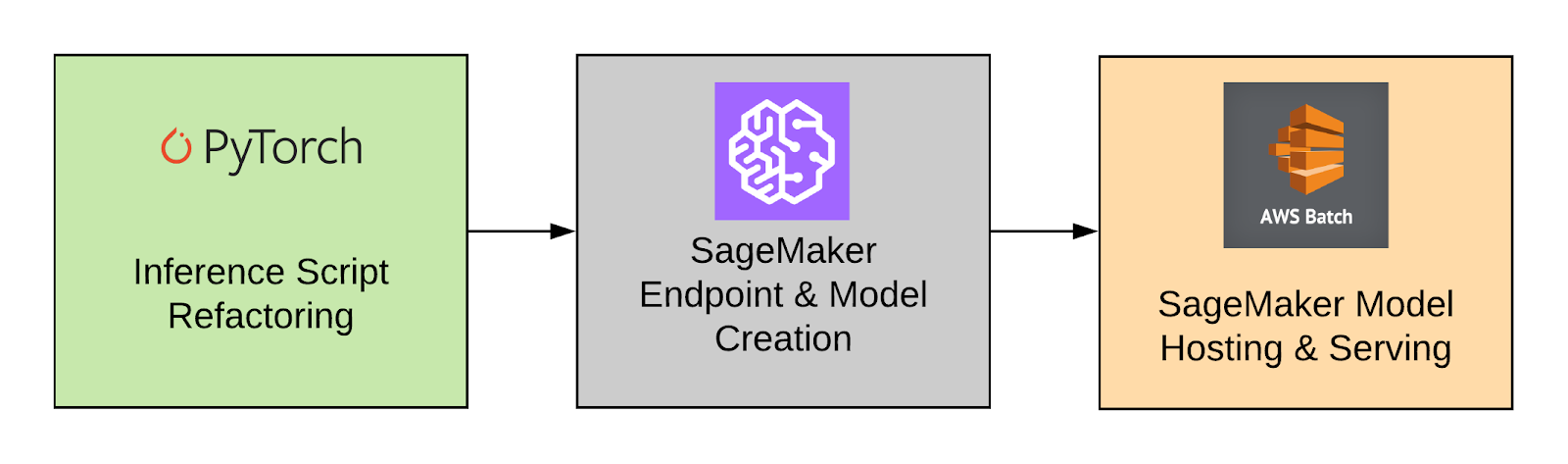

Deploying high-quality, trained machine learning (ML) models to perform either batch or real-time inference is a critical piece of bringing value to customers. However, the ML experimentation process can be tedious: there are many approaches, and each takes a significant amount of time to implement. Amazon SageMaker provides a unified interface to experiment with different ML models, and the PyTorch Model Zoo allows us to easily swap our models in a standardized manner.


The deploy function creates an endpoint configuration and hosts the endpoint. The custom installed libraries are listed in the Docker file. The model will then change the highlighted object based on the provided instructions. For a complete list of parameters, refer to the GitHub repo. To follow along with the rest of the post, use the notebook file. This is the S3 location our MME will read models from. For more information on SAM, refer to their website and research paper. SGD and torch. It provides additional arguments such as original and mask images, allowing for quick modification and restoration of existing content. The file defines the configuration of the model server, such as number of workers and batch size. Starting PyTorch 1. See also Optimizer in the Hugging Face Transformers documentation. The last required file for TorchServe is model-config.


After you are done, follow the instructions in the cleanup section of the notebook to delete the resources provisioned in this post and avoid unnecessary charges. This action sends the pixel coordinates and the original image to a generative AI model, which generates a segmentation mask for the object. Because the models require resources and additional packages that are not on the base PyTorch DLC, you need to build a Docker image. Extend the TorchServe container: the first step is to prepare the model hosting container. The handle method is the main entry point for requests; it accepts a request object and returns a response object. The user experience flow for each use case is as follows: to remove an unwanted object, select the object from the image to highlight it. The main difference for the new MMEs with TorchServe support is how you prepare your model artifacts. In addition to the changes listed in the previous For single GPU training section, add the following changes to properly distribute workload across GPUs. Once you confirm the correct object selection, you can modify the object by supplying the original image, the mask, and a text prompt.
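The role of a handle method as the single entry point for requests can be sketched as below. This is an assumption-laden illustration, not the handler from the post and not the TorchServe base class: `SketchHandler`, the `"body"` request key, the `"prompt"` field, and the upper-casing stand-in model are all hypothetical. The structure follows the common pattern of splitting the entry point into preprocess, inference, and postprocess steps.

```python
import json


class SketchHandler:
    """Illustrative handler with a handle() entry point; not the TorchServe base class."""

    def __init__(self):
        self.initialized = False
        self.model = None

    def initialize(self, context):
        # A real handler would load model weights here; this stand-in upper-cases text.
        self.model = lambda prompt: prompt.upper()
        self.initialized = True

    def preprocess(self, requests):
        # Each request is assumed to carry a JSON document under "body".
        return [json.loads(req["body"])["prompt"] for req in requests]

    def inference(self, prompts):
        return [self.model(p) for p in prompts]

    def postprocess(self, outputs):
        # One response per request, in the same order.
        return [json.dumps({"result": out}) for out in outputs]

    def handle(self, requests, context):
        """Main entry point: take the batch of requests and return the responses."""
        if not self.initialized:
            self.initialize(context)
        return self.postprocess(self.inference(self.preprocess(requests)))


handler = SketchHandler()
responses = handler.handle(
    [{"body": json.dumps({"prompt": "remove the dog"})}],
    context=None,
)
```

Keeping the three steps separate makes it easier to swap the model or the request format without touching the entry point itself.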
