PySpark groupBy

As a quick reminder, PySpark GroupBy is a powerful operation that allows you to perform aggregations on your data. It groups the rows of a DataFrame based on one or more columns and then applies an aggregation function to each group. Common aggregation functions include sum, count, mean, min, and max. To compute several of these at once, we can combine multiple aggregation functions in a single agg call. In some cases, you may need to apply a custom aggregation function, for example one that takes a pandas Series as input and calculates the median value of the Series.

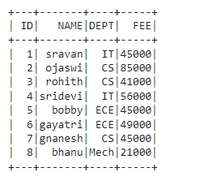

In PySpark, the DataFrame groupBy function groups data together based on the specified columns so that aggregations can be run on the collected groups. For example, with a DataFrame containing website click data, we may wish to group together all the browser type values contained in a certain column and then determine an overall count for each browser type. This would allow us to determine the most popular browser type used in website requests.

Reading CSV files with PySpark has been covered already. In this example, we are going to use a data… When running the following examples, it is presumed the data…

The purpose of the next example is to show that we can pass multiple columns to a single aggregate function. Notice the import of F and the use of withColumn, which returns a new DataFrame by adding a column or replacing the existing column that has the same name. This allows us to groupBy date and sum multiple columns. Note: the use of F in this example depends on having successfully completed the previous example.

Spark is smart enough to select only the necessary columns. We can also reduce the shuffle in a groupBy if the data is partitioned correctly by bucketing. The last example, aggregating on a particular value in a column, is also possible with SQL.

What is PySpark GroupBy?

In the pandas API on Spark (pyspark.pandas), GroupBy objects are returned by groupby calls on a DataFrame. For example, filter returns a copy of a DataFrame excluding elements from groups that do not satisfy the boolean criterion specified by func. Another GroupBy method is documented as a synonym for DataFrame…

PySpark groupBy on multiple columns can be performed either by passing a list of the DataFrame column names you want to group by or by passing multiple column names as separate parameters to the PySpark groupBy method. In this article, I will explain how to perform groupBy on multiple columns, including with PySpark SQL, and how to use the sum, min, max, and avg functions. Grouping on multiple columns in PySpark is performed by passing two or more columns to the groupBy method; this returns a GroupedData object, which provides agg, sum, count, min, max, avg, etc. When you group by multiple columns, rows that share the same combination of values in those key columns are shuffled and brought together.

The pyspark.sql.functions module is a collection of built-in functions available for DataFrame operations. From Apache Spark 3… For example, col returns a Column based on the given column name, lit creates a Column of literal value, rand generates a random column with independent and identically distributed (i.i.d.) samples uniformly distributed in [0.0, 1.0), and randn generates a column with i.i.d. samples from the standard normal distribution.

For instance, groupBy can be used to count the number of employees in each department. Some GroupBy methods are available only for DataFrameGroupBy objects.

Groups the DataFrame using the specified columns, so we can run aggregation on them. See GroupedData for all the available aggregate functions.

When you perform a groupBy, Spark can leverage data locality for faster processing: for built-in aggregates such as sum or count, each partition is first aggregated locally (a partial aggregation), and only these partial results are shuffled across the cluster to be combined.
