PySpark drop duplicates

What is the difference between PySpark distinct vs dropDuplicates methods?

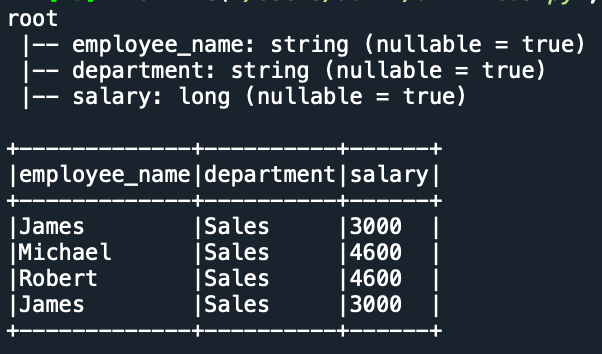

In this article, you will learn how to use the distinct and dropDuplicates functions with PySpark examples. We use this DataFrame to demonstrate how to get distinct rows across multiple columns. In the table above, the record with employee name James has duplicate rows: 2 rows have duplicate values on all columns, and 4 rows have duplicate values on the department and salary columns. The DataFrame has a total of 10 rows, 2 of which are identical on all columns, so performing distinct on it should return 9 rows after removing 1 duplicate row.


In PySpark, the distinct function is widely used to drop duplicate rows, comparing all columns of the DataFrame. The dropDuplicates function is widely used to drop duplicate rows based on one or more selected columns. RDD transformations are lazy operations: none of the transformations is executed until the user calls an action. This recipe explains what the distinct and dropDuplicates functions are and how they are used in PySpark.

Importing packages: the pyspark package is imported, along with SparkSession and expr, in order to use the distinct and dropDuplicates functions in PySpark. The Spark session is defined. Next, the DataFrame "data frame" is defined using the sample data and sample columns. The distinct function on the DataFrame returns a new DataFrame with the duplicate records removed. The dropDuplicates function is used to create "dataframe2", and the output is displayed using the show function. Finally, the dropDuplicates function is executed on selected columns.


Determines which duplicates if any to keep.

As a Data Engineer, I collect, extract and transform raw data in order to provide clean, reliable and usable data. In this tutorial, we want to drop duplicates from a PySpark DataFrame. To do this, we use the dropDuplicates method of PySpark. Before we can work with PySpark, we need to create a SparkSession. A SparkSession is the entry point into all functionalities of Spark. Next, we create the PySpark DataFrame "df" with some example data from a list.


In this article, we are going to drop duplicate rows based on a specific column of a DataFrame using PySpark in Python. Duplicate data means rows that hold the same values in the column or columns used for comparison. For this, we use the dropDuplicates method. Syntax : dataframe.


The dropDuplicates function returns a new DataFrame with duplicate rows removed; when columns are passed as arguments, it considers only the selected columns. The complete example is available at GitHub for reference.

We can use the select function together with the distinct function to get the distinct values of particular columns.
