Huggingface tokenizers

A tokenizer is in charge of preparing the inputs for a model. The library contains tokenizers for all the models.

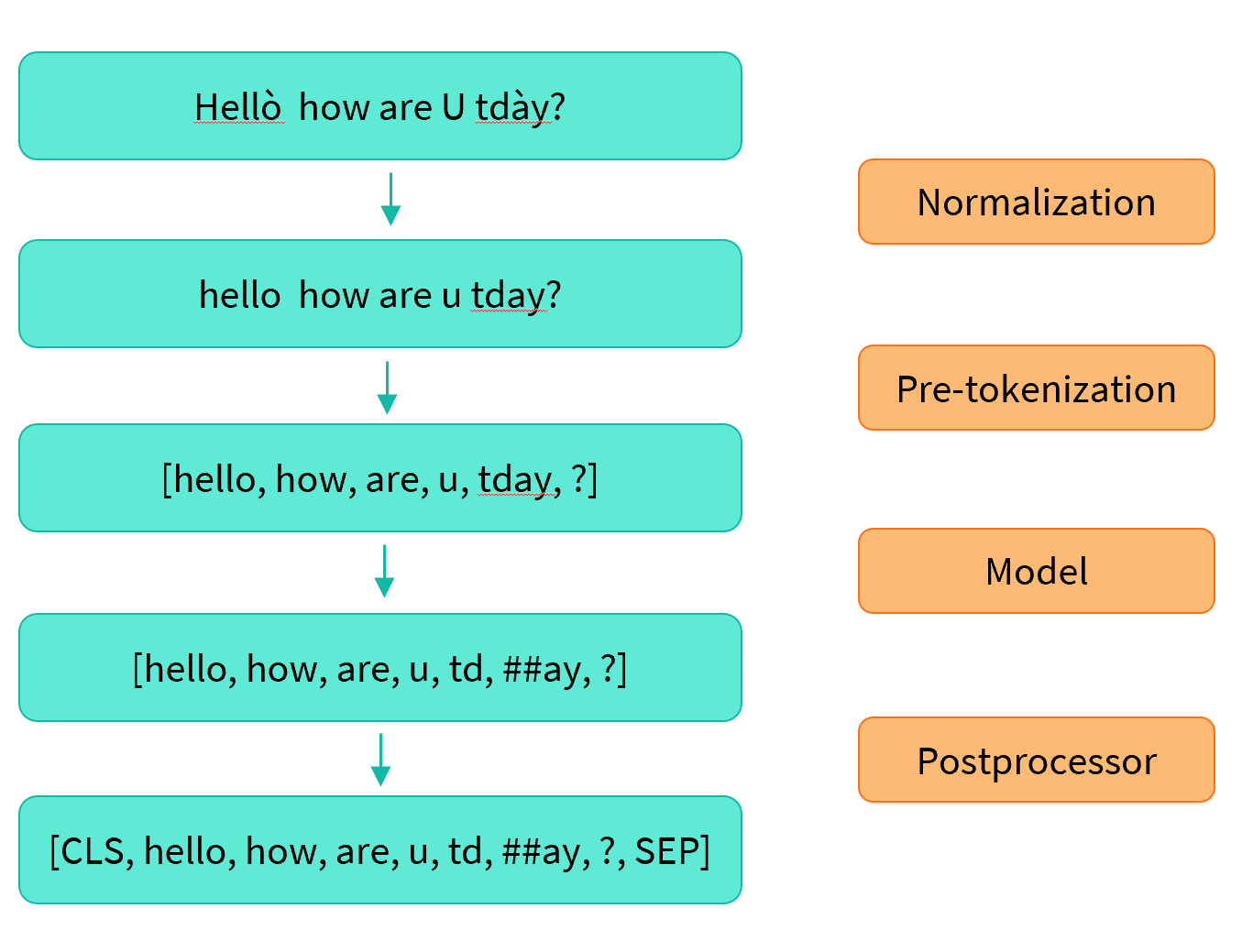

As we saw in the preprocessing tutorial, tokenizing a text means splitting it into words or subwords, which are then converted to IDs through a look-up table. Converting words or subwords to IDs is straightforward, so this summary focuses on splitting a text into words or subwords. Note that on each model page, you can look at the documentation of the associated tokenizer to see which tokenizer type was used by the pretrained model. For instance, the documentation for BertTokenizer shows that the model uses WordPiece. Splitting a text into smaller chunks is harder than it looks, and there are multiple ways of doing so.
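To make the two steps concrete, here is a minimal sketch of splitting words into subwords and then looking up their IDs. It uses a greedy longest-match-first rule in the spirit of WordPiece, but it is not the code behind BertTokenizer: the toy vocabulary, the `##` continuation prefix as used here, and the helper names `split_word` and `encode` are all assumptions made for illustration.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word.
VOCAB = {"[UNK]": 0, "token": 1, "##izer": 2, "##s": 3, "split": 4, "text": 5}


def split_word(word, vocab):
    """Split one word into subword pieces, always taking the longest match."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while end > start:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # continuation piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            # No piece fits at this position: the whole word is unknown.
            return ["[UNK]"]
        pieces.append(match)
        start = end
    return pieces


def encode(text, vocab):
    """Lowercase, split on whitespace, split into subwords, look up IDs."""
    tokens = [p for word in text.lower().split() for p in split_word(word, vocab)]
    ids = [vocab[token] for token in tokens]
    return tokens, ids


tokens, ids = encode("Tokenizers split text", VOCAB)
print(tokens)
print(ids)
```

The look-up itself is a single dictionary access per token, which is why the hard part of tokenization is the splitting step.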

Tokenizers are one of the core components of the NLP pipeline. They serve one purpose: to translate text into data that can be processed by the model. In NLP tasks, the data is generally raw text, but models can only process numbers, so tokenizers need to convert text inputs to numerical data. The goal is to find the most meaningful representation (that is, the one that makes the most sense to the model) and, if possible, the smallest one.

The first type of tokenizer that comes to mind is word-based: the goal is to split the raw text into words and find a numerical representation for each of them. There are different ways to split the text, for example on whitespace. There are also variations of word tokenizers that have extra rules for punctuation.
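As a rough illustration of word-based tokenization, the sketch below compares a plain whitespace split with a variant that treats punctuation as separate tokens, then gives each distinct token a number. The regular expression and the sample sentence are assumptions chosen for the example, not rules taken from any particular tokenizer.

```python
import re

text = "Let's do tokenization!"

# Variant 1: split on whitespace only; punctuation stays glued to words.
whitespace_tokens = text.split()

# Variant 2: runs of word characters, or single punctuation characters.
punct_tokens = re.findall(r"\w+|[^\w\s]", text)

# Give every distinct token an integer ID, starting from 0.
word_to_id = {tok: idx for idx, tok in enumerate(sorted(set(punct_tokens)))}
ids = [word_to_id[tok] for tok in punct_tokens]

print(whitespace_tokens)
print(punct_tokens)
print(ids)
```

With whitespace splitting, "tokenization!" and "tokenization" would be two different vocabulary entries, which is one reason punctuation rules are commonly added.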

However, not all languages use spaces to separate words. Unsurprisingly, there are many more techniques out there.

Release notes for the tokenizers library credit rlrs for the fast replace normalizers PR, which boosts the performance of the tokenizers. The release also reworks the release pipeline. Other breaking changes are mostly related to AddedToken, which is reworked.

When a Tokenizer encodes text, the input goes through a pipeline whose first step is normalization. The examples that require a Tokenizer use the tokenizer trained in the quicktour. Common normalization operations include stripping whitespace, removing accented characters or lowercasing all text. A typical normalizer applies NFD Unicode normalization and then removes accents. When building a Tokenizer, you can customize its normalizer by changing the corresponding attribute.
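The effect of NFD normalization followed by accent removal can be sketched with Python's standard `unicodedata` module. This is an illustration of the idea rather than the library's implementation; in the tokenizers library the same behaviour comes from chaining the `NFD` and `StripAccents` normalizers in a `normalizers.Sequence`. The function name `nfd_strip_accents` is hypothetical.

```python
import unicodedata


def nfd_strip_accents(text):
    """Decompose characters (NFD), then drop the combining accent marks."""
    decomposed = unicodedata.normalize("NFD", text)
    # After NFD, accents are separate code points in category "Mn"
    # (nonspacing mark), so filtering them leaves the base letters.
    return "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")


normalized = nfd_strip_accents("Héllò hôw are ü?")
print(normalized)
```

NFD has to come first: without decomposition, a character such as "é" is a single code point and there is no separate accent to remove.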

The tokenizer classes inherit from PreTrainedTokenizerBase. Two of the arguments are worth a note. The value of the stride argument defines the number of overlapping tokens between a truncated sequence and its overflow. If is_split_into_words is set to True, the tokenizer assumes the input is already split into words (for instance, by splitting it on whitespace), which it will then tokenize.
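The overlap described above can be pictured with a small sketch that cuts a long list of token IDs into windows sharing a fixed number of tokens. It is a simplified model under stated assumptions: the helper `chunk_with_stride` is hypothetical, special tokens are ignored, and the library's own truncation logic handles more cases than this.

```python
def chunk_with_stride(token_ids, max_length, stride):
    """Cut token_ids into windows of max_length that overlap by stride tokens."""
    if not 0 <= stride < max_length:
        raise ValueError("stride must satisfy 0 <= stride < max_length")
    step = max_length - stride  # how far each new window advances
    chunks = []
    for start in range(0, len(token_ids), step):
        chunks.append(token_ids[start:start + max_length])
        if start + max_length >= len(token_ids):
            break  # this window already reaches the end of the input
    return chunks


chunks = chunk_with_stride(list(range(10)), max_length=4, stride=2)
for chunk in chunks:
    print(chunk)
```

Each window repeats the last two tokens of the previous one, so context that straddles a cut is seen whole in at least one window.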

One way to reduce the amount of unknown tokens is to go one level deeper, using a character-based tokenizer.
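A minimal sketch of the character-based idea, assuming a tiny corpus and hypothetical helpers `build_char_vocab` and `encode_chars`: the vocabulary holds single characters, so a word never seen during vocabulary building can still be encoded without unknown tokens, as long as its characters were seen.

```python
def build_char_vocab(corpus):
    """Assign an ID to every distinct character; ID 0 is reserved for unknowns."""
    vocab = {"[UNK]": 0}
    for ch in sorted(set(corpus)):
        vocab[ch] = len(vocab)
    return vocab


def encode_chars(text, vocab):
    """Map each character to its ID, falling back to the unknown ID."""
    return [vocab.get(ch, vocab["[UNK]"]) for ch in text]


vocab = build_char_vocab("tokenizers split text")
ids = encode_chars("kittens", vocab)  # this word is not in the corpus
print(len(vocab))
print(ids)
```

The trade-off is sequence length: "kittens" becomes seven tokens here, where a word-based tokenizer would produce one.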

Each word gets assigned an ID, starting from 0 and going up to the size of the vocabulary. Note that when adding new tokens to the vocabulary, you should also resize the token embedding matrix of the model so that it matches the tokenizer.

To have a better base vocabulary, GPT-2 uses bytes as the base vocabulary, which is a clever trick to force the base vocabulary to be of size 256 while ensuring that every base character is included in the vocabulary. With character-based tokenization, too, some questions arise concerning spaces and punctuation.

Normalization comes with alignments tracking.
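The byte-level base vocabulary mentioned above can be illustrated with plain UTF-8 encoding: every string, whatever its script, reduces to values between 0 and 255. This sketch shows only that base idea. GPT-2's actual tokenizer also remaps bytes to printable characters and applies learned merges on top, which is not reproduced here, and `to_byte_ids` is a hypothetical helper.

```python
def to_byte_ids(text):
    """Encode text as UTF-8 and return one integer per byte."""
    return list(text.encode("utf-8"))


sample = "héllo 👋"
byte_ids = to_byte_ids(sample)
print(byte_ids)

# Every ID fits in a base vocabulary of 256 entries, and the mapping
# is lossless: decoding the bytes gives back the original text.
restored = bytes(byte_ids).decode("utf-8")
print(restored == sample)
```

Because no character can fall outside the 256 byte values, a byte-level base vocabulary never needs an unknown token.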
