Flink keyBy

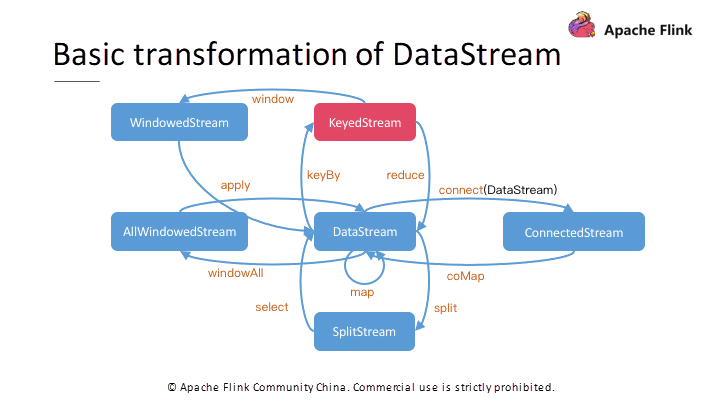

Operators transform one or more DataStreams into a new DataStream. Programs can combine multiple transformations into sophisticated dataflow topologies.

Map takes one element and produces one element. For example, a map function can double the values of the input stream. FlatMap takes one element and produces zero, one, or more elements.
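The one-in, one-out contract of Map can be shown with a small local model. This sketch uses java.util.stream purely as a stand-in, because Stream.map has the same per-element behavior. In a Flink job, the equivalent call is DataStream.map with a MapFunction. The class name MapSketch is hypothetical.

```java
import java.util.List;
import java.util.stream.Collectors;

// Local stand-in for a Flink map: exactly one output element per input element.
// MapSketch is a hypothetical name; this is plain Java, not the Flink API.
final class MapSketch {
    // Doubles every value, mirroring "a map function that doubles the values of the input stream".
    static List<Integer> doubleAll(List<Integer> input) {
        return input.stream()
                .map(value -> 2 * value)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(doubleAll(List.of(1, 2, 3))); // [2, 4, 6]
    }
}
```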


In this section you will learn about the APIs that Flink provides for writing stateful programs. See Stateful Stream Processing for the concepts behind stateful stream processing. To use keyed state, you first need to specify a key on a DataStream. Flink uses this key to partition both the state and the records of the stream. Calling keyBy with the key yields a KeyedStream, and a KeyedStream allows operations that use keyed state. A key selector function takes a single record as input and returns the key for that record. The key can be of any type, but it must be derived from deterministic computations. Flink's data model is not based on key-value pairs, so you do not need to physically pack data set types into keys and values. You can also specify keys using tuple field indices or expressions that select fields of objects. However, a KeySelector function is strictly superior: Java lambdas make it easy to use, and it potentially has less overhead at runtime.
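The following plain-Java model makes the partitioning step concrete. It routes records the way keyBy conceptually does:

1. A key selector derives a key from each record.
2. The key is mapped to a key group.
3. The key group is mapped to a parallel operator instance.

This is a simplified sketch, not Flink code. java.util.function.Function stands in for KeySelector, and the maximum parallelism of 128 is an assumed value. Flink also applies a murmur hash to key.hashCode(), which this sketch omits so the arithmetic stays visible. The class name KeyByRouting is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

// Simplified model of keyBy routing. Assumption: real Flink additionally
// murmur-hashes key.hashCode(); the raw hashCode is used here for clarity.
final class KeyByRouting {
    // Map a key to one of maxParallelism key groups.
    static int keyGroupFor(Object key, int maxParallelism) {
        return Math.floorMod(key.hashCode(), maxParallelism);
    }

    // Map a key group to an operator instance index in [0, parallelism).
    static int operatorIndexFor(int keyGroup, int maxParallelism, int parallelism) {
        return keyGroup * parallelism / maxParallelism;
    }

    // Group records by the operator instance their key routes to.
    static <T, K> Map<Integer, List<T>> partition(List<T> records, Function<T, K> keySelector,
                                                  int maxParallelism, int parallelism) {
        Map<Integer, List<T>> byInstance = new TreeMap<>();
        for (T record : records) {
            K key = keySelector.apply(record); // must be deterministic
            int group = keyGroupFor(key, maxParallelism);
            int instance = operatorIndexFor(group, maxParallelism, parallelism);
            byInstance.computeIfAbsent(instance, i -> new ArrayList<>()).add(record);
        }
        return byInstance;
    }

    public static void main(String[] args) {
        List<String> words = List.of("apple", "banana", "apple", "cherry", "banana", "apple");
        // Key selector: the word itself, with an assumed max parallelism of 128 and parallelism 2.
        System.out.println(partition(words, w -> w, 128, 2));
    }
}
```

The route depends only on the key, so every record with the same key reaches the same instance. This is why keyed state can live locally on that instance.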

You can activate debug logs from the native code of the RocksDB compaction filter by enabling the DEBUG log level for FlinkCompactionFilter.

Operator list state uses even-split redistribution. Flink concatenates the state elements from all instances into one logical list and divides that list evenly among the new parallel instances. For example, suppose that with parallelism 1 the checkpointed state of an operator contains the elements element1 and element2. When the parallelism is increased to 2, element1 may end up in operator instance 0, while element2 goes to operator instance 1. Data is transmitted over the network when operator instances are distributed across different processes.
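The element1/element2 example can be modelled with the simplified sketch below. It assumes even-split redistribution: the per-instance lists are concatenated and then cut into as many contiguous, near-equal sublists as there are new parallel instances. The class name is hypothetical, and Flink's exact assignment order may differ from this model.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of even-split redistribution for operator list state.
final class EvenSplitRedistribution {
    // Concatenate the old per-instance lists, then split the result into
    // newParallelism contiguous sublists whose sizes differ by at most one.
    static <T> List<List<T>> redistribute(List<List<T>> oldStates, int newParallelism) {
        List<T> all = new ArrayList<>();
        for (List<T> state : oldStates) {
            all.addAll(state);
        }
        List<List<T>> result = new ArrayList<>();
        int base = all.size() / newParallelism;
        int remainder = all.size() % newParallelism;
        int start = 0;
        for (int i = 0; i < newParallelism; i++) {
            int size = base + (i < remainder ? 1 : 0); // first instances take the extras
            result.add(new ArrayList<>(all.subList(start, start + size)));
            start += size;
        }
        return result;
    }

    public static void main(String[] args) {
        // Parallelism 1 -> 2, matching the element1/element2 example.
        System.out.println(redistribute(List.of(List.of("element1", "element2")), 2)); // [[element1], [element2]]
    }
}
```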

This article explains the basic concepts, installation, and deployment process of Flink. Definitions of stream processing vary. Conceptually, stream processing and batch processing are two sides of the same coin. Their relationship resembles two ways of consuming the elements of a Java ArrayList. You can treat the elements directly as a bounded dataset and access them by index, or you can access them one at a time through an iterator. Figure 1.
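The ArrayList analogy can be made concrete with a small sketch that consumes the same list in two ways.

- **Batch style:** the list is a bounded dataset read by index.
- **Stream style:** elements arrive one at a time through an iterator, and an updated result is emitted after each one.

This is only an illustration of the analogy. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Illustrates the ArrayList analogy: bounded index access vs. iterator-driven consumption.
final class BatchVsStream {
    // Batch view: size is known up front and any element can be read by index.
    static int sumByIndex(List<Integer> data) {
        int sum = 0;
        for (int i = 0; i < data.size(); i++) {
            sum += data.get(i);
        }
        return sum;
    }

    // Stream view: elements are consumed in order; a running result is emitted
    // after every element without knowing how many elements remain.
    static List<Integer> runningSums(Iterator<Integer> it) {
        List<Integer> emitted = new ArrayList<>();
        int sum = 0;
        while (it.hasNext()) {
            sum += it.next();
            emitted.add(sum);
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<Integer> data = new ArrayList<>(List.of(3, 1, 4));
        System.out.println(sumByIndex(data));             // 8
        System.out.println(runningSums(data.iterator())); // [3, 4, 8]
    }
}
```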

In the first article of the series, we gave a high-level description of the objectives and required functionality of a Fraud Detection engine. We also described how to make data partitioning in Apache Flink customizable based on modifiable rules instead of using a hardcoded KeysExtractor implementation. We intentionally omitted details of how the applied rules are initialized and what possibilities exist for updating them at runtime. In this post, we will address exactly these details. You will learn how the approach to data partitioning described in Part 1 can be applied in combination with a dynamic configuration.
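One way to picture rule-based partitioning is the hypothetical sketch below. Each rule lists the field names to group by. Every event is emitted once per rule, together with a key built from those fields, so a subsequent keyBy on that key brings related events together. The names Rule, Keyed, and DynamicKeying, and the fields payerId and beneficiaryId, are illustrative assumptions rather than the classes used in the series. The sketch uses Java records, which require Java 16 or later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical sketch of rule-driven key extraction (not the series' actual code).
final class DynamicKeying {
    // A rule names the fields whose values form the grouping key.
    record Rule(int id, List<String> groupingKeyNames) {}

    // An event tagged with the key it should be partitioned by and the rule that produced it.
    record Keyed(Map<String, String> event, String key, int ruleId) {}

    // Build a composite key such as "{payerId=25;beneficiaryId=12}" from the named fields.
    static String keyFor(Map<String, String> event, List<String> fieldNames) {
        return fieldNames.stream()
                .map(name -> name + "=" + event.get(name))
                .collect(Collectors.joining(";", "{", "}"));
    }

    // Fan one event out into one keyed record per rule; a downstream keyBy on
    // Keyed::key would then send events sharing a key to the same instance.
    static List<Keyed> fanOut(Map<String, String> event, List<Rule> rules) {
        List<Keyed> out = new ArrayList<>();
        for (Rule rule : rules) {
            out.add(new Keyed(event, keyFor(event, rule.groupingKeyNames()), rule.id()));
        }
        return out;
    }

    public static void main(String[] args) {
        Map<String, String> tx = Map.of("payerId", "25", "beneficiaryId", "12");
        List<Rule> rules = List.of(
                new Rule(1, List.of("payerId")),
                new Rule(2, List.of("payerId", "beneficiaryId")));
        for (Keyed k : fanOut(tx, rules)) {
            System.out.println(k.ruleId() + " -> " + k.key());
        }
        // 1 -> {payerId=25}
        // 2 -> {payerId=25;beneficiaryId=12}
    }
}
```

Because rules are data rather than code, adding or changing a rule changes the keys without redeploying a hardcoded extractor.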


A FlatMap function can, for example, split sentences into words. Filter evaluates a boolean function for each element and retains only the elements for which the function returns true.
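The FlatMap and Filter semantics can be modelled locally with java.util.stream, which is used here only as a stand-in. One difference from Flink: a Flink FlatMapFunction emits its outputs through a Collector rather than returning a stream. The class and method names are hypothetical. In this sketch, a blank sentence yields no words at all, which shows the "zero outputs" case.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Local stand-ins for Flink's FlatMap and Filter (plain Java, not the Flink API).
final class FlatMapFilterSketch {
    // FlatMap: each sentence produces zero, one, or more words.
    static List<String> splitIntoWords(List<String> sentences) {
        return sentences.stream()
                .flatMap(s -> s.isBlank() ? Stream.<String>empty() : Arrays.stream(s.trim().split("\\s+")))
                .collect(Collectors.toList());
    }

    // Filter: keep only elements for which the predicate returns true (here, non-zero values).
    static List<Integer> keepNonZero(List<Integer> values) {
        return values.stream()
                .filter(value -> value != 0)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(splitIntoWords(List.of("to be", "", "or not to be"))); // [to, be, or, not, to, be]
        System.out.println(keepNonZero(List.of(0, 3, 0, 5)));                    // [3, 5]
    }
}
```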


Now, we will first look at the different types of state available and then see how they can be used in a program. The available keyed state primitives are ValueState, ListState, ReducingState, AggregatingState, and MapState. The initializeState method takes a FunctionInitializationContext as its argument. In snapshotState, the ListState is first cleared of all objects included by the previous checkpoint. It is then filled with the new objects we want to checkpoint.

Connect combines two data streams while retaining their types, which allows the two streams to share state. An interval join joins two elements e1 and e2 of two keyed streams that share a common key. The join applies over a given time interval, so that e1.timestamp + lowerBound <= e2.timestamp <= e1.timestamp + upperBound. Cache stores the intermediate result of a transformation. The API also gives fine-grained control over operator chaining if desired.

The program does not run until you build the entire graph and explicitly call the execute method. The first type of operation is a single-record operation, such as filtering out undesirable records (Filter) or converting each record (Map). When a new record arrives, the Sum operator updates the maintained volume sum and outputs a record with the updated sum.
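The clear-then-refill step in snapshotState can be sketched with a local model of a buffering sink. In Flink, a function that implements CheckpointedFunction provides snapshotState and initializeState. In this model, a plain ArrayList stands in for ListState. The class name, the threshold, and the flush logic are assumptions for illustration only.

```java
import java.util.ArrayList;
import java.util.List;

// Local model of the ListState snapshot/restore pattern (not Flink's API).
final class BufferingSinkModel {
    private final int threshold;
    private final List<String> buffer = new ArrayList<>();            // in-memory buffer
    private final List<String> checkpointedState = new ArrayList<>(); // stand-in for ListState
    private final List<String> flushed = new ArrayList<>();           // values "written out"

    BufferingSinkModel(int threshold) {
        this.threshold = threshold;
    }

    // Buffer each value; flush the whole buffer once it reaches the threshold.
    void invoke(String value) {
        buffer.add(value);
        if (buffer.size() >= threshold) {
            flushed.addAll(buffer);
            buffer.clear();
        }
    }

    // Drop what the previous checkpoint stored, then refill with the current buffer.
    void snapshotState() {
        checkpointedState.clear();
        checkpointedState.addAll(buffer);
    }

    // On recovery, repopulate the buffer from the restored state.
    void initializeState(List<String> restored) {
        buffer.clear();
        buffer.addAll(restored);
    }

    List<String> checkpointed() { return List.copyOf(checkpointedState); }
    List<String> buffered() { return List.copyOf(buffer); }
    List<String> flushedValues() { return List.copyOf(flushed); }

    public static void main(String[] args) {
        BufferingSinkModel sink = new BufferingSinkModel(3);
        sink.invoke("a");
        sink.invoke("b");
        sink.snapshotState();
        System.out.println(sink.checkpointed()); // [a, b]
        sink.invoke("c"); // reaches the threshold and flushes a, b, c
        sink.invoke("d");
        sink.snapshotState();
        System.out.println(sink.checkpointed()); // [d]
    }
}
```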

Flink uses a concept called windows to divide a potentially infinite DataStream into finite slices based on the timestamps of elements or other criteria.
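The sketch below illustrates slicing a stream by timestamp. It assigns each element to a fixed-size, non-overlapping (tumbling) window by rounding the element's timestamp down to a multiple of the window size, then counts the elements in each window. It is a plain-Java model with no window offset and an assumed 5-second window size. In a Flink job, a window assigner performs this assignment rather than hand-written code. The class name is hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java model of tumbling-window assignment (no offset).
final class TumblingWindowSketch {
    // Start of the window [start, start + size) that contains timestamp ts.
    static long windowStart(long ts, long size) {
        return ts - Math.floorMod(ts, size);
    }

    // Count elements per window, keyed by window start.
    static Map<Long, Integer> countPerWindow(List<Long> timestamps, long size) {
        Map<Long, Integer> counts = new TreeMap<>();
        for (long ts : timestamps) {
            counts.merge(windowStart(ts, size), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Timestamps in milliseconds, 5-second windows.
        System.out.println(countPerWindow(List.of(1000L, 4999L, 5000L, 12000L), 5000L)); // {0=2, 5000=1, 10000=1}
    }
}
```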

Figure 4. This way, after building the entire graph, you submit it to run on a remote or local cluster. Let's look at a more complicated example. After partitioning the records by item type with keyBy, use the Fold method to maintain the volume of each item type in the operator. To count the total transaction volume across all types, however, all records must be sent to the same compute node. Use a StreamExecutionEnvironment to build and execute the program. The slot sharing group is inherited from input operations if all input operations are in the same slot sharing group. Windows group all the stream events according to some characteristic (e.g., the data that arrived within the last 5 seconds). Windows can also be defined on regular, non-keyed DataStreams (windowAll).
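The two-stage volume computation can be modelled locally in two steps:

1. A keyed running sum is kept per item type. This is the role that keyBy plus Fold or Sum plays in the job.
2. A single global node keeps the latest sum for each type and emits the overall total.

The Trade record, the class name, and the method names are hypothetical, and plain maps stand in for Flink's keyed state. The sketch uses Java records, which require Java 16 or later.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Local model: per-type keyed sums, then one global node that totals all types.
final class VolumeByType {
    // One transaction: an item type and a traded volume (hypothetical record).
    record Trade(String type, long volume) {}

    // Stage 1 only: final per-type volume, as a keyed running sum would hold it.
    static Map<String, Long> perTypeTotals(List<Trade> trades) {
        Map<String, Long> perType = new HashMap<>();
        for (Trade t : trades) {
            perType.merge(t.type(), t.volume(), Long::sum);
        }
        return perType;
    }

    // Stages 1 and 2: after each trade, the keyed stage emits (type, updated sum);
    // the single global node keeps the latest sum per type and emits the total.
    static List<Long> globalTotals(List<Trade> trades) {
        Map<String, Long> keyedSums = new HashMap<>();     // stand-in for keyed state
        Map<String, Long> latestPerType = new HashMap<>(); // state of the global node
        List<Long> totals = new ArrayList<>();
        for (Trade t : trades) {
            long updated = keyedSums.merge(t.type(), t.volume(), Long::sum);
            latestPerType.put(t.type(), updated);
            totals.add(latestPerType.values().stream().mapToLong(Long::longValue).sum());
        }
        return totals;
    }

    public static void main(String[] args) {
        List<Trade> trades = List.of(new Trade("A", 3), new Trade("B", 5), new Trade("A", 2));
        System.out.println(globalTotals(trades)); // [3, 8, 10]
    }
}
```

The keyed stage can run in parallel because each type's sum is independent. Only the final total needs every update in one place, which is why that stage is routed to a single node.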
