FFmpeg threads

Anything found on the command line which cannot be interpreted as an option is considered to be an output URL. Selecting which streams from which inputs will go into which output is either done automatically or with the -map option (see the Stream selection chapter). To refer to input files in options, you must use their indices (0-based). Similarly, streams within a file are referred to by their indices.
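The indexing rules above can be sketched with a small helper that assembles an ffmpeg argument list. This is only an illustration: the function names `map_args` and `build_command` and the file names are hypothetical, and the helper only builds the command, it does not run ffmpeg.

```python
def map_args(selections):
    """Build -map arguments from (input_index, stream_index) pairs, both 0-based."""
    args = []
    for input_index, stream_index in selections:
        if input_index < 0 or stream_index < 0:
            raise ValueError("indices are 0-based and must be non-negative")
        args += ["-map", f"{input_index}:{stream_index}"]
    return args


def build_command(inputs, selections, output):
    """Assemble an ffmpeg argv list: inputs, then -map options, then the output URL."""
    cmd = ["ffmpeg"]
    for path in inputs:
        cmd += ["-i", path]
    cmd += map_args(selections)
    cmd.append(output)  # not an option, so ffmpeg treats it as an output URL
    return cmd


if __name__ == "__main__":
    # Hypothetical files: stream 0 of the first input, stream 1 of the second.
    print(" ".join(build_command(["a.mkv", "b.mkv"], [(0, 0), (1, 1)], "out.mkv")))
```

With the hypothetical inputs shown, the printed command is `ffmpeg -i a.mkv -i b.mkv -map 0:0 -map 1:1 out.mkv`: the first input is index 0, the second is index 1, and the number after the colon selects a stream inside that input.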

Opened 10 years ago. Closed 10 years ago. Last modified 9 years ago.

Summary of the bug: FFmpeg ignores the -threads option with libx.

It is normal for libx to take up more CPU than libx. This does not mean that libx is not using multithreading.

With both builds (Zeranoe's and mine) and both commands, I've got the same problem with the -threads option: it uses both threads instead of only one.


It wasn't that long ago that reading, processing, and rendering the contents of a single image took a noticeable amount of time. But both hardware and software techniques have gotten significantly faster. What may have made sense many years ago (lots of workers on a frame) may not matter today, when a single worker can process a frame or a group of frames more efficiently than the overhead of spinning up a bunch of workers to do the same task. But where to move that split now?

Basically the systems of today are entirely different beasts to the ones commonly on the market when FFmpeg was created. This is tremendous work that requires lots of rethinking about how the workload needs to be defined, scheduled, distributed, tracked, and merged back into a final output. Kudos to the team for being willing to take it on. FFmpeg is one of those "pinnacle of open source" infrastructure components that civilizations are built from.

I assume 1 process with 2 threads takes up less space on the die than 2 processes, both single-threaded. If this is true, a threaded solution will always have the edge on performance, even as everything scales ad infinitum.

LtdJorge (3 months ago): Hardware threads are not the same as software threads.

Thanks Capt Obvious.

LtdJorge (76 days ago): I don't understand your comment then.


What is the default value of this option?

Sometimes it's simply one thread per core. Sometimes it's more complex.

You can verify this on a multi-core computer by examining CPU load (Linux: top, Windows: Task Manager) with different options to ffmpeg.

So the default may still be optimal in the sense of "as good as this ffmpeg binary can get", but not optimal in the sense of "fully exploiting my leet CPU".

Some of these answers are a bit old, and I'd just like to add that with my ffmpeg 4.
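One way to try the check described above is to run the same encode with several -threads values and compare wall-clock time while watching CPU load. The sketch below is an assumption-laden illustration, not a benchmark method from the original answers: the helper names, the `input.mp4` file name and the choice of `libx264` as the encoder are all hypothetical, and the run is skipped unless ffmpeg and the input file are actually present.

```python
import os
import shutil
import subprocess
import time


def sweep_commands(src, thread_counts, codec="libx264"):
    """Return one ffmpeg argv list per thread count; output goes to the null muxer."""
    return [
        ["ffmpeg", "-y", "-i", src, "-c:v", codec,
         "-threads", str(n), "-f", "null", "-"]
        for n in thread_counts
    ]


def time_command(cmd):
    """Run a command and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True,
                   stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return time.perf_counter() - start


if __name__ == "__main__":
    src = "input.mp4"  # hypothetical test file
    counts = [1, 2, os.cpu_count() or 1]
    if shutil.which("ffmpeg") and os.path.exists(src):
        for cmd in sweep_commands(src, counts):
            threads = cmd[cmd.index("-threads") + 1]
            print(threads, "threads:", round(time_command(cmd), 2), "s")
```

Placing -threads after the input and before the output applies it to the encoder. If the timings stop improving beyond some thread count, that is a hint that the encoder, not the option, is the limit on that machine.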

The libavcodec library now contains a native VVC (Versatile Video Coding) decoder, supporting a large subset of the codec's features. Further optimizations and support for more features are coming soon.

Thanks to a major refactoring of the ffmpeg command-line tool, all the major components of the transcoding pipeline (demuxers, decoders, filters, encoders, muxers) now run in parallel. This should improve throughput and CPU utilization, decrease latency, and open the way to other exciting new features. Note that you should not expect significant performance improvements in cases where almost all computational time is spent in a single component (typically video encoding).

FFmpeg 6. Some of the highlights:

This release had been overdue for at least half a year, but due to constant activity in the repository, had to be delayed, and we were finally able to branch off the release recently, before some of the large changes scheduled for 7. Internally, we have had a number of changes too. This also led to a reduction in the size of the compiled binary, which can be noticeable in small builds.
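The caveat about a single dominant component can be made concrete with a deliberately idealized model: if the pipeline stages overlap perfectly, the steady-state cost per frame drops from the sum of the stage times to the time of the slowest stage. The sketch below assumes exactly that and ignores queueing, synchronization overhead and any threading inside a stage. The per-frame timings are invented for illustration, not measurements of FFmpeg.

```python
def sequential_time(stage_ms):
    """Per-frame cost when stages run one after another: the sum of all stages."""
    return sum(stage_ms.values())


def pipelined_time(stage_ms):
    """Idealized per-frame cost when stages overlap: bounded by the slowest stage."""
    return max(stage_ms.values())


def speedup(stage_ms):
    """Best-case gain from running the stages in parallel under this model."""
    return sequential_time(stage_ms) / pipelined_time(stage_ms)


if __name__ == "__main__":
    # Invented per-frame timings in milliseconds.
    balanced = {"demux": 5, "decode": 5, "filter": 5, "encode": 5, "mux": 5}
    encode_bound = {"demux": 1, "decode": 2, "filter": 1, "encode": 95, "mux": 1}
    print("balanced:", speedup(balanced))
    print("encode-bound:", round(speedup(encode_bound), 3))
```

In this model the balanced pipeline gains a factor of 5, while the encode-bound one gains only about 5 percent, which matches the warning that parallel components help little when almost all time is spent in video encoding.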


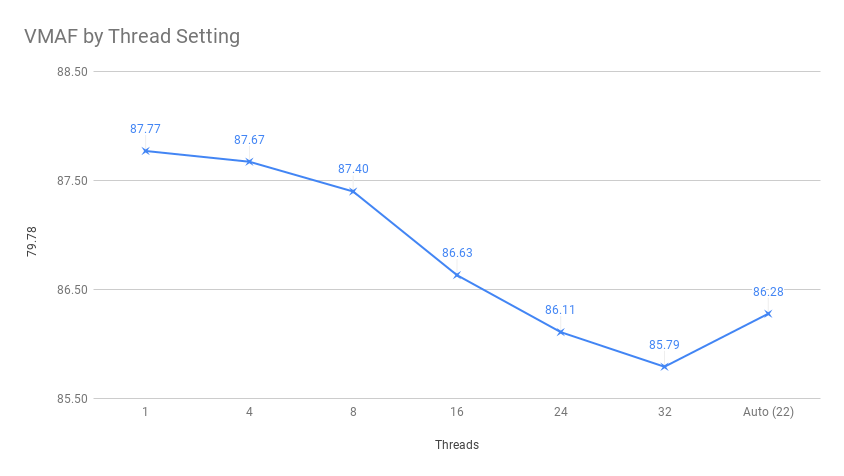

This article details how the FFmpeg -threads option impacts performance, overall quality, and transient quality for live and VOD encoding. As we all have learned too many times, there are no simple questions when it comes to encoding. So hundreds of encodes and three days later, here are the questions I will answer.

On a multiple-core computer, the -threads option controls how many threads FFmpeg consumes. Note that this file is 60p.

This varies depending upon the number of cores in your computer. I produced this file on my 8-core HP ZBook notebook and you see the threads value of ... For a simulated live capture operation, the value was ...

Pretty much the way you would expect it to. In a simulated capture scenario (outputting p video at 60 fps, using the -re switch to read the incoming file in real time), you get the following.
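The two scenarios in the article, a VOD encode and a simulated live capture, differ on the command line only by the -re input option. A minimal sketch of both follows, assuming a hypothetical helper `encode_command` and hypothetical file names. These are not the article's actual test commands, which are not reproduced here.

```python
def encode_command(src, output, threads=None, live=False):
    """Build an ffmpeg argv list for a VOD or simulated-live encode.

    threads=None omits -threads so ffmpeg and the encoder pick their default.
    live=True adds -re before the input so the file is read in real time.
    """
    cmd = ["ffmpeg"]
    if live:
        cmd.append("-re")  # input option, so it must come before -i
    cmd += ["-i", src]
    if threads is not None:
        cmd += ["-threads", str(threads)]  # output option, applies to the encoder
    cmd.append(output)
    return cmd


if __name__ == "__main__":
    # Hypothetical file names.
    print(" ".join(encode_command("in.mp4", "vod.mp4", threads=4)))
    print(" ".join(encode_command("in.mp4", "live.mp4", threads=4, live=True)))
```

Because -re throttles reading to the source frame rate, a live-style run cannot finish faster than real time, so the useful question there is whether a given thread count keeps up, not how fast it completes.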


No other streams will be included in this output file.

So who wants to pay the cost for that tradeoff?

I haven't found the command yet, but it does support it.

When stats for multiple streams are written into a single file, the lines corresponding to different streams will be interleaved.

When this tool is invoked, we stream the video of these browser interactions with FFmpeg by streaming the virtual display buffer the browser is using. Could be useful for others.

They're fundamentally predict-the-next-token machines; they regurgitate and mix parts of their training data in order to satisfy the token prediction loss function they were trained with.

This is done with the -dec directive, which takes as a parameter the index of the output stream that should be decoded.

Enabled by default; use -noautoscale to disable it.

GPT-4 Turbo now has k context and I can provide it with larger portions of the code base for the refactors.

If each thread needed to process the frame separately, you would need to make significant changes to the codec, and I hypothesize it would cause the video stream to be bigger in size.


Finally, those are passed to the muxer, which writes the encoded packets to the output file.

Note, however, that since both examples use -c copy, it matters little whether the filters are applied on input or output; that would change if transcoding was happening.

I'll try an older build, because maybe it is a problem with the latest build.

In most codecs the entropy coder doesn't reset across frames, so there is enough freedom that you can do multithreaded decoding.

Was that a separate branch?

I had to stop drinking my decaf for fear of spitting it all over my computer, I was laughing out loud so much!

I think it's normal.

Lots of the time I'd be happy to use tons of RAM and output with a fixed keyframe interval.

Defines how many threads are used to process a filter pipeline.

Live content also probably makes it hard.
